The Race to Catch Up with Nvidia: Innovations in AI Accelerators from Groq, Cerebras, and AMD

Introduction

The artificial intelligence (AI) hardware market is experiencing unprecedented growth, driven by the increasing demand for powerful and efficient AI accelerators. Nvidia has long dominated this space, with its GPUs powering many of the world's most advanced AI applications, holding over 90% of the data center AI chip market. However, companies like Groq, Cerebras Systems, and AMD are introducing innovative technologies that challenge Nvidia's supremacy, particularly in AI inference, where speed and cost-efficiency are critical.

This article explores the cutting-edge AI accelerators developed by Groq, Cerebras, and AMD, detailing their architectures, performance metrics, and recent developments as of July 2025. Drawing from semiconductor industry analyses, academic papers, and the latest news, we examine how these companies are positioning themselves to compete with Nvidia.

Groq: Tensor Streaming for Lightning-Fast Inference

Groq, founded in 2016 by former Google engineers, including Jonathan Ross, a designer of Google's Tensor Processing Unit (TPU), has developed the Language Processing Unit (LPU). The LPU leverages a Tensor Streaming Processor (TSP) architecture, optimized for low-latency, high-throughput AI inference tasks, particularly for large language models (LLMs).

According to benchmarks, the LPU achieves up to 300 tokens per second on Llama2-70B and approximately 750 tokens per second on smaller 7B models, significantly outperforming Nvidia's H100, which typically processes 30-60 tokens per second. The LPU's design, featuring 230 MB of on-chip SRAM and 80 TB/s memory bandwidth, enables rapid data access and processing, making it ideal for real-time applications like chatbots, recommendation systems, and predictive analytics.

Groq's rack-mounted LPU node designed for high-throughput AI inference

Groq's rack-mounted LPU node designed for high-throughput AI inference

Key Features of Groq's LPU

- Architecture: Tensor Streaming Processor with 750 TOPS INT8 and 188 TFLOPs FP16 peak performance.

- Memory: 230 MB SRAM, offering high-bandwidth, low-latency access.

- Performance: 17.6x faster than Nvidia's V100 at batch size 1 for image inference and 2.5x faster at larger batches; 300 tokens per second on Llama2-70B.

- Power Efficiency: Claims up to 10x better performance per watt than GPUs, with a TDP of around 150 W.

- Limitations: Limited memory capacity requires multiple LPUs for larger models, increasing complexity and cost.

Recent developments highlight Groq's growing influence. In July 2025, Groq launched its first European data center in Helsinki, Finland, in partnership with Equinix, capitalizing on rising demand for AI services in Europe. Additionally, Meta's LlamaCon event in April 2025 announced a tokens-as-a-service offering powered by Groq, alongside Nvidia and Cerebras, indicating strong industry adoption. However, Groq's focus on inference rather than training and its reliance on multiple chips for large models may limit its versatility compared to Nvidia's GPUs.

Cerebras: Wafer-Scale Engines for Massive Parallelism

Cerebras Systems has taken a revolutionary approach with its Wafer-Scale Engine (WSE), a massive chip that integrates multiple dies on a single wafer. The WSE-3, released in 2024, features 900,000 cores, 44 GB of on-chip SRAM, and an unprecedented 21 PB/s memory bandwidth—7,000 times higher than Nvidia's H100. This architecture allows Cerebras to store entire AI models on a single chip, eliminating memory bottlenecks that plague GPU-based systems. Benchmarks show the WSE-3 delivering 1,800 tokens per second for Llama 3.1-8B and 57x faster performance than GPU-based solutions for the 70B DeepSeek model.

The Cerebras WSE-3: The world's largest chip with 900,000 cores

The Cerebras WSE-3: The world's largest chip with 900,000 cores

Cerebras' inference service, launched in August 2024, has been adopted by companies like Perplexity and Mistral AI, with claims of outperforming Nvidia's Blackwell chips in specific scenarios, such as 2,500 tokens per second on the 400B Llama 4 Maverick model. The company's partnership with Dell, announced in June 2024, integrates WSE-3 into Dell's PowerEdge servers, expanding its enterprise reach. Cerebras also filed for an IPO in September 2024, signaling its ambition to scale operations and challenge Nvidia's market dominance.

Key Features of Cerebras' WSE-3

- Architecture: Wafer-scale chip (46,225 mm²) with 900,000 cores and 44 GB SRAM.

- Memory Bandwidth: 21 PB/s, enabling rapid data processing for large models.

- Performance: 250 PFLOPS (FP8/FP16) per rack, 1,800 tokens per second on Llama 3.1-8B, and 57x faster than GPUs on DeepSeek-R1.

- Power Consumption: High TDP of 20 kW, but offers superior performance per watt for large-scale tasks.

- Limitations: High power consumption and cost (estimated $5M per rack) may restrict adoption in smaller deployments.

Cerebras' wafer-scale approach excels in training and inference for massive models, but its high power requirements and cost pose challenges for widespread adoption. The company's focus on niche, high-performance applications, such as pharmaceutical research with GlaxoSmithKline, underscores its potential in specialized markets.

AMD: Advancing with the MI300 Series

AMD has emerged as a strong contender in the AI accelerator market with its Instinct MI300 series. The MI325X, launched in October 2024, is designed to compete directly with Nvidia's Blackwell chips, offering 288 GB of HBM3E memory and 5.2 TB/s bandwidth. AMD claims the MI325X delivers up to 40% better inference performance than Nvidia's H200 on Meta's Llama 3.1 model, with benchmarks showing 4,598 tokens per second for Mixtral-8x7B. The MI350 series, planned for 2025, and MI400 for 2026, aim to further close the performance gap using advanced TSMC N2P processes.

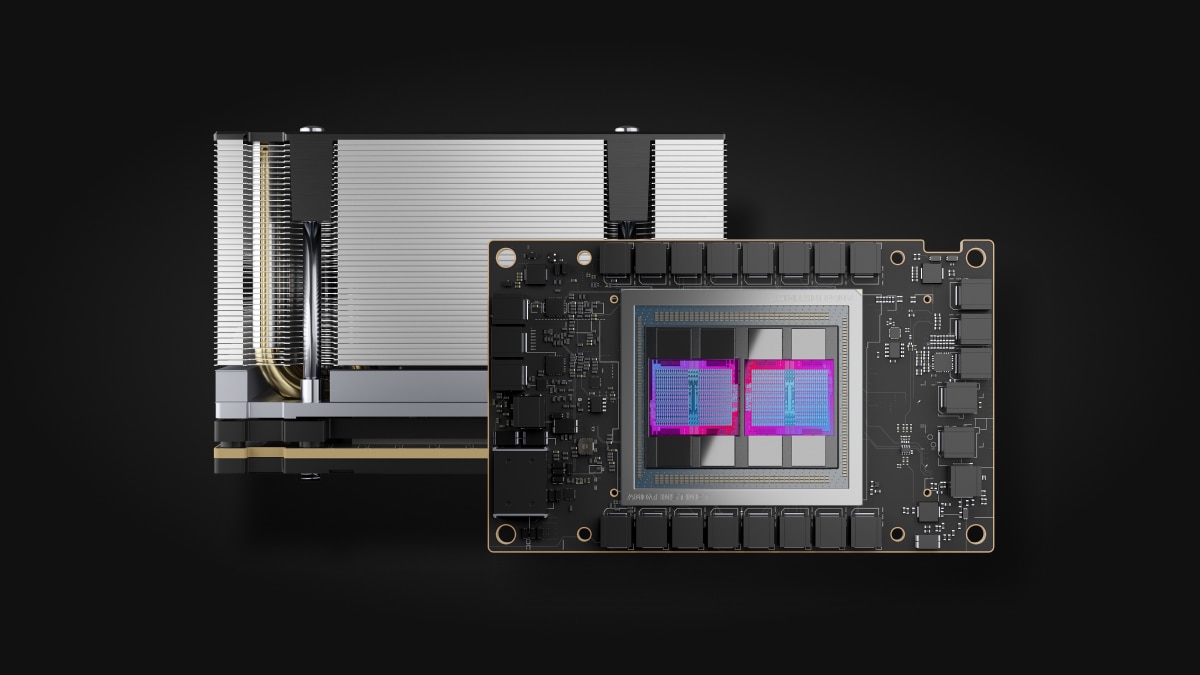

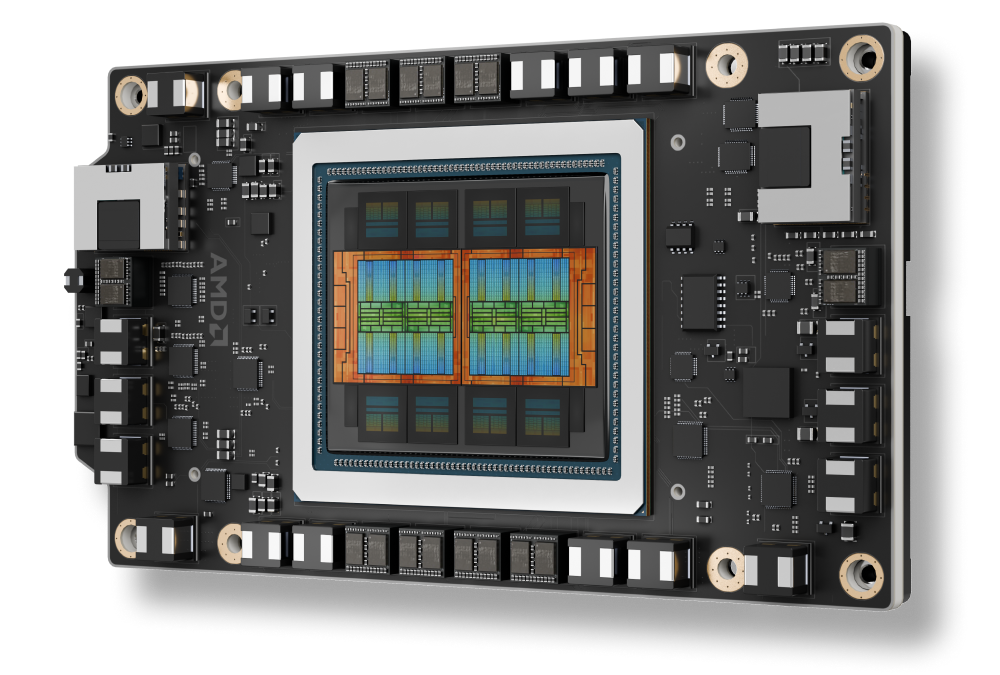

AMD Instinct MI350X: Next-generation AI accelerator with 288 GB HBM3E memory

AMD Instinct MI350X: Next-generation AI accelerator with 288 GB HBM3E memory

AMD is also enhancing its ROCm software platform to rival Nvidia's CUDA, addressing a key barrier to adoption. Partnerships with Microsoft, Meta, and OpenAI demonstrate growing market traction, with the MI300X powering virtual machine instances on Microsoft Azure and Oracle Cloud Infrastructure. AMD's acquisition of ZT Systems in August 2024 for $4.9 billion bolsters its expertise in rack-scale AI solutions, positioning it to compete in large-scale deployments.

Key Features of AMD's MI325X

- Architecture: Chiplet-based design with 8 Accelerator Complex Dies (XCDs).

- Memory: 288 GB HBM3E with 5.2 TB/s bandwidth, surpassing Nvidia's H100 (80 GB, 3.35 TB/s).

- Performance: 20.9 PFLOPS (FP8), 40% faster inference than H200 on Llama 3.1.

- Power Consumption: 750 W TDP, slightly higher than Nvidia's H100 (700 W).

- Limitations: Software ecosystem (ROCm) is less mature than Nvidia's CUDA, potentially limiting developer adoption.

AMD's focus on cost-effective, high-performance accelerators makes it a viable alternative to Nvidia, particularly for enterprises seeking to diversify their AI hardware suppliers. However, Nvidia's software dominance remains a significant hurdle.

Comparative Analysis

The following table summarizes key metrics for Groq, Cerebras, AMD, and Nvidia accelerators, highlighting their strengths and weaknesses:

Key Performance Metrics Comparison

| Metric | Groq LPU | Cerebras WSE-3 | AMD MI325X | Nvidia H100 |

|---|---|---|---|---|

| FP8 Performance | 820 TOPS | 250 PFLOPS (Per Rack) |

20.9 PFLOPS | 3,958 TFLOPS |

| Memory Bandwidth | 80 TB/s | 21 PB/s | 5.2 TB/s | 3.35 TB/s |

| Inference Speed | 300 tokens/s Llama2-70B |

1,800 tokens/s Llama 3.1-8B |

4,598 tokens/s Mixtral-8x7B |

30-60 tokens/s |

| Power Consumption | ✅ 300 W | ⚠️ 20 kW | 750 W | 700 W |

| Software Support | GroqWare ❌ Limited |

CSL ❌ Experimental |

ROCm ⚠️ Improving |

CUDA ✅ Extensive |

Legend:

- ✅ Best in class / Advantage

- ⚠️ Moderate / Caution needed

- ❌ Limited / Disadvantage

- Highlighted values indicate standout performance metrics

Performance

- Cerebras WSE-3: Excels in large-scale model processing due to its massive on-chip memory and bandwidth, ideal for training and inference of frontier models.

- Groq LPU: Leads in low-latency inference, making it suitable for real-time applications, but requires multiple units for larger models.

- AMD MI325X: Offers a balance of performance and cost, with strong inference capabilities and growing adoption in data centers.

- Nvidia H100: Provides versatile performance across training and inference, with unmatched software ecosystem support.

Power Efficiency

Groq's LPU is highly efficient, with claims of 10x better performance per watt than GPUs for specific tasks. Cerebras' WSE-3, despite its high 20 kW TDP, offers superior performance per watt for large models due to its high utilization of on-chip memory. AMD's MI325X is slightly less efficient than Nvidia's H100, but its higher memory capacity compensates for certain workloads.

Software Support

Nvidia's CUDA platform remains the gold standard, with extensive support for frameworks like TensorFlow, PyTorch, and vLLM. AMD's ROCm is improving but lacks the same level of maturity, while Cerebras' CSL and Groq's GroqWare have limited framework support, posing challenges for developers.

Market Adoption

Nvidia dominates with over 90% market share, but Groq, Cerebras, and AMD are gaining traction. Groq's partnership with Meta and Cerebras' collaboration with Dell and Microsoft highlight their growing enterprise presence. AMD's adoption by hyperscalers like Microsoft and Oracle positions it as a strong second option.

Recent Developments and Future Outlook

As of July 2025, the AI accelerator market is highly dynamic:

- Groq: Expanded globally with a Helsinki data center and secured a $640 million funding round in August 2024, valuing the company at $2.8 billion. Its focus on inference and partnerships with Meta suggest a strong niche in real-time AI applications.

- Cerebras: Filed for an IPO in September 2024 and launched data centers in Minneapolis, Oklahoma City, and Montreal, with 512 CS-3 systems planned for 2025. Its collaboration with Dell and adoption by companies like Mistral AI bolster its market position.

- AMD: Accelerated its product roadmap with the MI325X, MI350 (2025), and MI400 (2026), and acquired ZT Systems to enhance rack-scale solutions. Its partnerships with Microsoft, Meta, and OpenAI indicate growing acceptance in the data center market.

- Nvidia: Maintains its lead with the Blackwell architecture and NVLink Fusion, allowing integration with rival chips, but faces increasing competition in inference.

The shift toward AI inference, driven by the deployment of LLMs in real-world applications, favors Groq and Cerebras, which excel in speed and efficiency for these tasks. AMD's balanced approach and software improvements position it to capture a significant share of the projected $500 billion AI accelerator market by 2028. However, Nvidia's robust ecosystem and market dominance make it a formidable competitor, and its recent software advancements could further solidify its position.

Looking ahead, the competition will hinge on software development, cost-effectiveness, and scalability. Groq and Cerebras must expand their software ecosystems to rival CUDA, while AMD needs to maintain its aggressive product roadmap. The growing adoption of these accelerators by hyperscalers and enterprises suggests a diversifying market, with each company carving out a niche to challenge Nvidia's dominance.

Conclusion

The AI accelerator landscape is becoming increasingly competitive, with Groq, Cerebras, and AMD introducing innovative technologies that address specific needs in AI computing. Groq's LPU offers unmatched inference speed, Cerebras' WSE-3 excels in large-scale model processing, and AMD's MI325X provides a cost-effective alternative with growing market traction. While Nvidia remains the leader, these companies are redefining the possibilities of AI hardware, driving a future where diverse architectures coexist to meet the demands of an AI-driven world.

References

- Comparing AI Hardware Architectures: SambaNova, Groq, Cerebras vs. Nvidia GPUs & Broadcom ASICs

- A Comparison of the Cerebras Wafer-Scale Integration Technology with Nvidia GPU-based Systems for Artificial Intelligence

- A Review on Proprietary Accelerators for Large Language Models

- Nvidia challenger Groq expands with first European data center

- Meta Enters The Token Business, Powered By Nvidia, Cerebras And Groq

- Cerebras introduces next-gen AI chip for GenAI training

- Cerebras partners with Dell to challenge Nvidia's AI dominance

- AMD launches MI325X AI chip to rival Nvidia's Blackwell

- Cerebras, an A.I. Chipmaker Trying to Take On Nvidia, Files for an I.P.O.

- AMD Delivers Leadership AI Performance with AMD Instinct MI325X Accelerators

- Dell and Cerebras Expand AI Partnership for Enterprise Solutions

- Groq Raises $640 Million in Series D Funding

- AMD Unveils Aggressive AI Chip Roadmap Through 2026

- Benchmarking the Latest AI Accelerators: Performance Analysis

- Cerebras Expands Global Footprint with New AI Data Centers

- Cerebras Systems S-1 Filing Details AI Market Strategy

- Nvidia's Software Moat: CUDA Ecosystem Analysis

- The Rise of Specialized AI Hardware: Market Analysis 2025

- Power Efficiency in AI Accelerators: A Comparative Study

- Nvidia Blackwell Architecture: Technical Deep Dive

- Microsoft Azure Adopts AMD MI300X for AI Workloads

- GlaxoSmithKline Partners with Cerebras for Drug Discovery